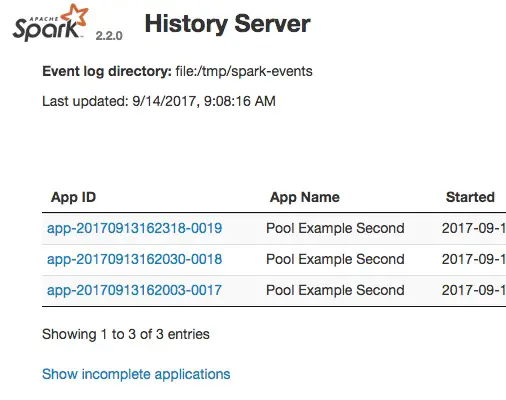

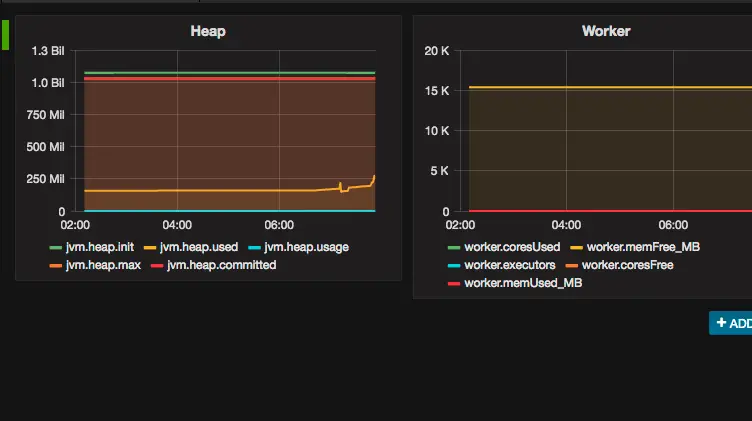

Mastering PySpark: Most Popular PySpark Tutorials

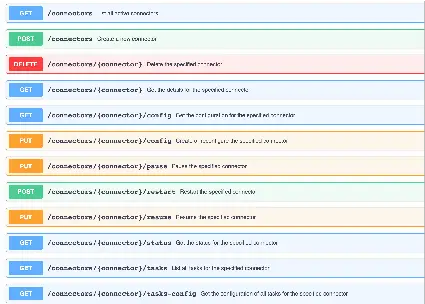

As the demand for data processing and analytics continues to soar, PySpark has emerged as a powerful tool in the data streaming landscape. Here on supergloo.com, a hub for PySpark tutorials, you will find insights to help you harness the full potential of PySpark. In this blog recap post, let’s explore the top five PySpark tutorials …