Apache Spark with Cassandra: A Game of Thrones Example

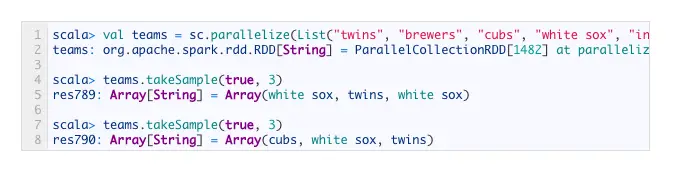

Apache Spark and Apache Cassandra are two open-source technologies that, used together, offer high performance and scalability. Spark is a fast and flexible big data processing engine, and Cassandra is a highly scalable, distributed NoSQL database. Combined, they provide a robust platform for real-time data processing and analytics. One of the key benefits of using …