Spark Streaming with Scala: Getting Started Guide

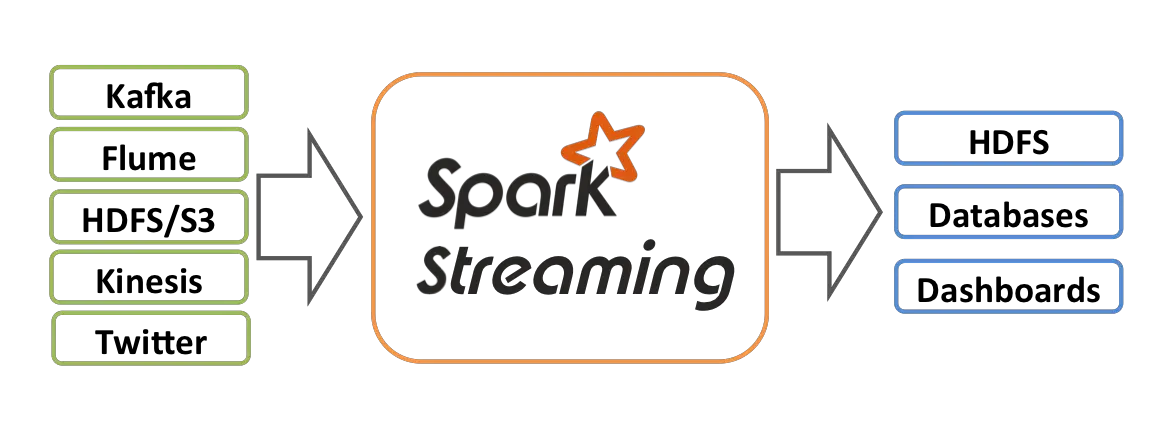

Spark Streaming enables scalable, fault-tolerant processing of real-time data streams such as Kafka and Kinesis. Spark Streaming is an extension of the core Spark API that provides high-throughput processing of live data streams. Scala is a programming language that is designed to run on the Java Virtual Machine (JVM). It is a statically-typed language that … Read more