How to Determine Kafka Connect License or Not?

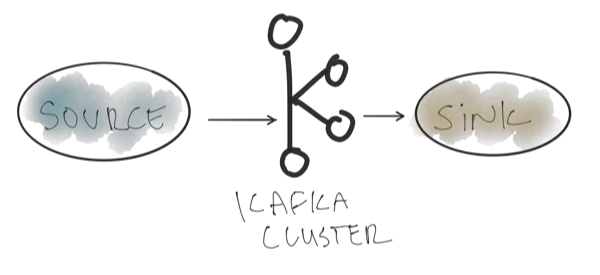

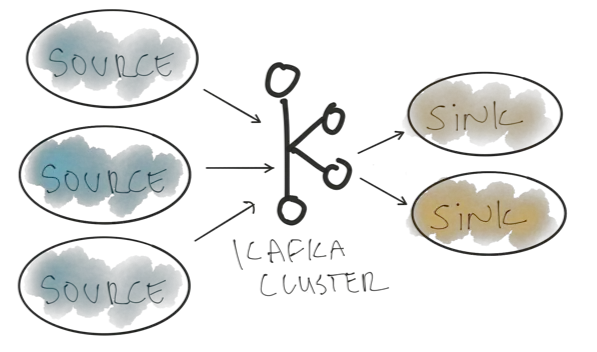

Determining whether a Kafka Connect source or sink connector requires a license to be purchased usually depends on the specific connector and its vendor. Because Kafka Connect itself is an open-source framework, but individual connectors may have different licensing models. Put another way, as a developer and/or operator or anyone trying to build and create … Read more