PySpark DataFrames are a distributed collection of data organized into named columns. It is conceptually equivalent to a table in a relational database or a data frame in R, but with richer optimizations under the hood.

DataFrames can be constructed from a wide array of sources such as: structured data files, tables in Hive, external databases, or existing RDDs.

In PySpark, DataFrames can be created from a local data set by using the spark.createDataFrame() method. You can also read data from a file into a DataFrame using the spark.read.format() method, and specifying the file format (e.g. "csv", "json", etc.) and the path to the file.

Once you have a DataFrame, you can perform various operations on it, such as filtering rows, selecting specific columns, aggregating data, performing inner and outer joins, and more.

Table of Contents

- PySpark DataFrames Examples

- How to Create PySpark DataFrames from Existing Data Sources?

- What makes PySpark DataFrames unique?

- What are the alternatives to PySpark DataFrames?

- What are possible drawbacks of PySpark DataFrames?

- Further Resources

PySpark DataFrames Examples

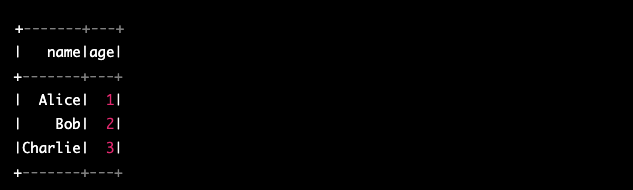

Here’s an example of how you can create and manipulate a DataFrame in the PySpark:

from pyspark.sql import SparkSession

# Create a SparkSession

spark = SparkSession.builder.appName("PySpark-DataFrames").getOrCreate()

# Load a local data set into a new DataFrame

data = [("Alice", 1), ("Bob", 2), ("Charlie", 3)]

df = spark.createDataFrame(data, ["name", "age"])

# Print the data from the constructed PySpark DataFrame

df.show()This would produce the following output:

Now that we have the df DataFrame constructed, we can run other PySpark transformation functions on it such as:

# Select only the "name" column from the PySpark Dataframe

df.select("name").show()

# Filter rows based on a condition

df.filter(df.age > 1).show()

# Group the data by the "age" column and compute the average "age"

df.groupBy("age").avg().show()All of these PySpark transformation examples and more are covered on this site. See links in the Resources section below.

How to Create PySpark DataFrames from Existing Data Sources?

There are many ways to create Dataframes in PySpark from existing sources such as CSV files, JSON, Database queries, etc. I’ve organized examples of creating dataframes into their own separate tutorials:

What makes PySpark DataFrames unique?

PySpark DataFrames are distributed across nodes in a Spark cluster. When you create a DataFrame in PySpark, the data is automatically distributed across the nodes in the cluster based on the partitioning strategy. Analyze large datasets in a distributed computing environment such as this can be much faster and more efficient than data processing on a single machine.

You can also specify the number of partitions for DataFrames, which determines how the data is distributed across the nodes in the cluster. By default, PySpark uses the default partitioning strategy, but this can overridden by specifying your own partitioning strategy.

What are the alternatives to PySpark DataFrames?

There are several alternatives to PySpark DataFrames used for data manipulation and analysis tasks such as the following:

Pandas: Pandas is a popular Python library for working with tabular data. As expected when considering alternatives to PySpark DataFrames, Pandas provides data manipulation and analysis capabilities, especially suited when working with small to medium-sized datasets.

Dask: Dask is a flexible parallel computing library. It can scale processing tasks across multiple CPU cores or distributed clusters. It provides a Pandas-like API to work with large datasets in a distributed environment.

RDDs (Resilient Distributed Datasets): RDDs were the original core data structure in Spark providing a distributed, fault-tolerant, and immutable data abstraction. RDDs are not as high-level as DataFrames, and require more code to perform common data manipulation tasks.

Apache Flink: Apache Flink is another popular open-source data processing framework provides powerful data processing capabilities, including support for distributed stream processing. Flink provides a DataSet API that is similar to PySpark DataFrames.

What are possible drawbacks of PySpark DataFrames?

Depending on your perspective, PySpark DataFrames may have some limitations and downsides. Some possible examples downsides may include:

- Complexity: PySpark DataFrames can be more complex to use than other tools, such as Pandas. This can be especially true for users who are not familiar with distributed computing concepts. PySpark requires a certain level of expertise and understanding of distributed computing principles.

- Performance: Believe it or not, PySpark may not be as fast as some other tools, such as Pandas, for certain types of data engineering tasks. PySpark is designed for distributed computing, which introduces some overhead and complexity compared to tools that are designed for single-machine processing. In other words, PySpark might be overkill in some situations.

- Steep learning curve: PySpark has a steep learning curve. It can take some time to become proficient with PySpark DataFrames and to learn how to use them effectively.

Further Resources

- Example of PySpark Filter

- Example of PySpark withColumn

- Examples of PySpark Transformations

- Official PySpark Documentation

Before you go

Make sure to check out the the PySpark Tutorials page for growing list of PySpark and PySpark SQL tutorials.