Spark Read JSON is a powerful capability allowing developers to read and query JSON files using Apache Spark. JSON, or JavaScript Object Notation, is a lightweight data-interchange format commonly used for data transfer.

With Spark read JSON, users can easily load JSON data into Spark DataFrames, which can then be manipulated using Spark’s powerful APIs. This allows users to perform complex data transformations and analysis on JSON data, without the need for complex parsing and data manipulation code. In addition, Spark Read JSON supports a wide range of JSON data formats, including nested and complex structures, making it a versatile tool for working with JSON data.

This Spark read json tutorial covers using Spark SQL with a JSON file input data source in Scala.

** The original tutorial was created for Spark 1.4, but it has been updated for Spark 3.4 as May 2023 **

If you are interested in using Python instead, check out Spark SQL JSON in Python tutorial page.

Table of Contents

- Spark SQL JSON Overview

- Spark Read JSON Example Tutorial Part 1

- Spark SQL JSON Example Tutorial Part 2

- Why use Spark to read JSON?

- Spark Read JSON and Query with SQL Further External Reference

Spark SQL JSON Overview

We will show examples of JSON as input source to Spark SQL’s SQLContext. This Spark SQL tutorial with JSON has two parts. Part 1 focus is the “happy path” when using JSON with Spark SQL. Part 2 covers a “gotcha” or something you might not expect when using Spark SQL JSON data source.

By the way, If you are not familiar with Spark SQL, a couple of references include a summary of Spark SQL chapter post and the first Spark read CSV in Scala tutorial.

Methodology

We will use a customers.json file as a simple file to get us started which I’ve called customers.json. Here is a link to customers.json file. In part 2, we use a more advanced example JSON file, but this Spark read json tutorial is designed to start simple intentionally.

Spark Read JSON Example Tutorial Part 1

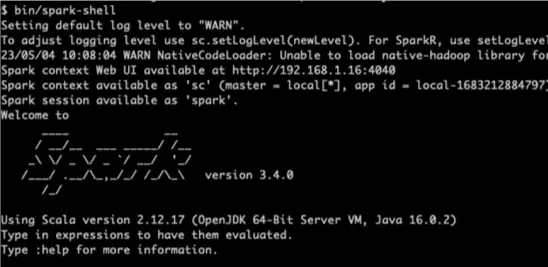

1. Start the spark shell

$SPARK_HOME/bin/spark-shell

2. Spark Read JSON Example

Next, in the spark shell which conveniently provides a SparkSession, we can use the spark.read.json function to load the example JSON file into a new DataFrame as shown:

scala> val customers = spark.read.json("customers.json")

customers: org.apache.spark.sql.DataFrame = [address: struct<city: string, state: string ... 2 more fields>, first_name: string ... 1 more field]The above code sample assumes you have customers.json in the same directory as from where the spark-shell script was called.

3. JSON DataFrame View

Next let’s register the JSON based DataFrame to a temp table to ease our future SQL queries as shown next:

scala> customers.createOrReplaceTempView("customers")4. Query JSON with SQL

We are now in a position to run some Spark SQL

scala> spark.sql("SELECT first_name, address.city, address.state FROM customers").show

+----------+-----------+-----+

|first_name| city|state|

+----------+-----------+-----+

| James|New Orleans| LA|

| Josephine| Brighton| MI|

| Art| Bridgeport| NJ|

+----------+-----------+-----+Ok, we started with a simple example, but the real world is rarely this simple. So, in part 2, we’ll cover a more complex example.

Spark SQL JSON Example Tutorial Part 2

If you run the customers.json from part 1 above through http://jsonlint.com, it will not validate.

You may be surprised to know that creating invalid JSON for Part 1 was intentional.

But why?

Two part answer.

Part 1 – we needed JSON source which works well to Spark read JSON and then query with Spark SQL quickly to have success and build momentum.

But, if you read the Spark SQL documentation closely:

Note that the file that is offered as a json file is not a typical JSON file. Each line must contain a separate, self-contained valid JSON object. As a consequence, a regular multi-line JSON file will most often fail.

Source: Apache Spark documentation

So, this leads to the question what happens if we have valid JSON?

In this part of the Spark read JSON tutorial, we’ll cover how to use valid JSON as an input source for reading from Spark and then query with Spark SQL.

For input, we’ll use a file called baby_names.json.

It looks like this:

[{

"Year": "2013",

"First Name": "DAVID",

"County": "KINGS",

"Sex": "M",

"Count": "272"

}, {

"Year": "2013",

"First Name": "JAYDEN",

"County": "KINGS",

"Sex": "M",

"Count": "268"

}, {

"Year": "2013",

"First Name": "JAYDEN",

"County": "QUEENS",

"Sex": "M",

"Count": "219"

}, {

"Year": "2013",

"First Name": "MOSHE",

"County": "KINGS",

"Sex": "M",

"Count": "219"

}, {

"Year": "2013",

"First Name": "ETHAN",

"County": "QUEENS",

"Sex": "M",

"Count": "216"

}]I saved this as a file called baby_names.json and is available from spark-shell.

It is available for download at: https://github.com/supergloo/spark-scala-examples/tree/main/spark-read-json

Spark Read JSON Part 2 Steps

1. Start the spark-shell

This example assumes the baby_names.json file is in the same directory as where spark-shell was started.

2. Spark Read JSON [Failure]

Let’s show an example which I know will fail for reasons previously described:

scala> val names = spark.read.json("baby_names.json")

names: org.apache.spark.sql.DataFrame = [_corrupt_record: string]I consider this a failure because of the _corrupt_record: string result. Let’s fix this next.

3. Spark Read JSON _corrupt_record How to Fix

To fix the previously failure of _corrupt_record in Spark read JSON attempt, we need to set the “multiLine” option as shown here

scala> val n = spark.read.option("multiLine", true).json("baby_names.json")

n: org.apache.spark.sql.DataFrame = [Count: string, County: string ... 3 more fields]

// now we can register a temp table and query with SQL

scala> n.createOrReplaceTempView("babies")

scala> spark.sql("SELECT * FROM babies").show

+-----+------+----------+---+----+

|Count|County|First Name|Sex|Year|

+-----+------+----------+---+----+

| 272| KINGS| DAVID| M|2013|

| 268| KINGS| JAYDEN| M|2013|

| 219|QUEENS| JAYDEN| M|2013|

| 219| KINGS| MOSHE| M|2013|

| 216|QUEENS| ETHAN| M|2013|

+-----+------+----------+---+----+So, there you go. We can solve _corrupt_record by using the “mutliLine” option set to true to fix.

By the way, this was much more difficult in Spark 1.

I use to have to send through a map function after loading the file with wholeTextFiles function with SparkContext. The wholeTextFiles method produced a PairRDD, and then map to create the new RDD using the value portion of the pair.

scala> val jsonRDD = sc.wholeTextFiles("baby_names.json").map(x => x._2)

jsonRDD: org.apache.spark.rdd.RDD[String] = MapPartitionsRDD[10] at map at <console>:21Then, we could use this RDD as JSON and confirm the schema

scala> val namesJson = sqlContext.read.json(jsonRDD)

namesJson: org.apache.spark.sql.DataFrame = [Count: string, County: string, First Name: string, Sex: string, Year: string]

scala> namesJson.printSchema

root

|-- Count: string (nullable = true)

|-- County: string (nullable = true)

|-- First Name: string (nullable = true)

|-- Sex: string (nullable = true)

|-- Year: string (nullable = true)

scala>But, luckily in Spark 2 and beyond, the “multiLine” option can make things much more simple.

Why use Spark to read JSON?

Here are some reasons why using Spark to read JSON can be beneficial:

- Efficient: Spark’s distributed computing architecture allows it to process large JSON datasets in a highly parallelized manner

- Flexibility: JSON data can have a complex and flexible schema as we saw above, which can be challenging at times. Spark’s ability to handle semi-structured and nested data can make working with JSON more flexible.

- Integration: Spark can integrate with other data sources, such as Hadoop Distributed File System (HDFS), Apache Cassandra, and Amazon S3. This makes it easy to read JSON data from these sources.

- Rich set of APIs: Spark provides a rich set of APIs for working with JSON data that evolved significantly over time to perform complex load and queries on JSON datasets.

- Scalable: Spark is designed to scale horizontally.

Overall, using Spark to read JSON data can provide a solution for processing large and complex JSON based datasets. .

Spark Read JSON and Query with SQL Further External Reference

- The Apache Spark documentation on using JSON https://spark.apache.org/docs/latest/sql-data-sources-json.html

- Source for JSON files used in this tutorial: https://github.com/supergloo/spark-scala-examples/tree/main/spark-read-json

Before you go, you may wish to check out Spark SQL with Scala and PySpark SQL tutorials.

This is a nice read. If you have some free can you guide me solve the below scenario ?

How do i process a file when it has multiple json records in a file ?. eg

{

name : “Test Name”

age : “10”

}

{

name : “Test Name2”

age : “50”

}

is there a way to process files like these ? Or do i have to write a custom inputformat like in hadoop for this ?

SQL contexts read.json will read thiis type of data too. just pass it to json method. and do your processing normally.

it was help full for me , how to access array elements in json schema . I would like to read promotions elements . please suggest

|– promotions: array (nullable = true)

| |– element: struct (containsNull = true)

| | |– promotionContent: struct (nullable = true)

| | | |– ID: string (nullable = true)

I had the same problem.

In spark you can use:

scala> val selectView = sqlContext.sql(“SELECT inline(promotions) FROM table_name”)

If you want saving the result in hive.

Execute the sentence:

scala>selectView.saveAsTable(“database.final_table”)

I hope this could help you.

what if I have a Json file which looks like this

{

“Name”: “Hafeez”,

“Type”: “Normal”,

“Lang”: “French”,

“tags”: [{

“1”: “Kid”,

“2”: “Path”,

“3”: “LCD”

}, {

“1”: “Brother”,

“2”: “Kole”,

“3”: “screen”,

“4”: 6

}]

}

and I need the schema to look like this

scala> namesJson.printSchema

root

|– Name: string (nullable = true)

|– Type: string (nullable = true)

|– Lang: string (nullable = true)

|– 1: string (nullable = true)

|– 2: string (nullable = true)

|– 3: string (nullable = true)

|– 4: Integer (nullable = true)

How can we fetch the column name as address.city (parent_column.child_Column) in spark SQL

What if I need to update/mask a field in JSON file, may be name, address fields?

I have tons of JSON files and the fields can also be nested to any levels, can spark be helpful in updating the PII fields? Any Idea would be helpful.