There have been some significant changes in the Apache Spark API over the years and when folks new to Spark begin reviewing source code examples, they will see references to SparkSession, SparkContext and SQLContext.

Because this code looks so similar in design and purpose, users often ask questions such as “what’s the difference” and “why, or when, choose one vs another?”.

Good questions.

In this tutorial, let’s explore and answer SparkSession vs. Spark Context vs. SQLContext.

Table of Contents

- Spark API History Overview

- What is SparkContext?

- What is Spark SQLContext?

- Should I use SparkSession, SQLContext, or SparkContext?

- Spark Examples on Supergloo.com

Spark API History Overview

In order to answer questions on differences, similarities and when to use one vs. the other in SparkSession, SparkContext and SQLContext, it is important to understand how these classes were released in history.

Let’s explore each of these now and recommendations on when and why will become much more apparent.

What is SparkContext?

SparkContext is the the original entry point for using Apache Spark. It was the main component responsible for coordinating and executing Spark jobs across a cluster. It shouldn’t be used anymore, but this will become apparent why as we progress in this article.

Responsibilities also included managing the memory and resources of the cluster and providing a programming interface for creating and manipulating RDDs (Resilient Distributed Datasets), a the fundamental data structure in Spark.

SparkContext was typically created once per application because you can have only one, so if you want more than one you need to stop any existing.

In addition to creating RDDs, SparkContext provides methods for manipulating RDDs, such as transforming RDDs using map, filter, and reduce operations.

Simple example of using SparkContext in Scala

import org.apache.spark.SparkConf

import org.apache.spark.SparkContext

object ExampleApp {

def main(args: Array[String]): Unit = {

// Configure Spark settings

val conf = new SparkConf()

.setAppName("ExampleApp")

.setMaster("local[*]")

// Create a SparkContext

val sc = new SparkContext(conf)

// Create an RDD and perform some transformations

val data = sc.parallelize(Seq(1, 2, 3, 4, 5))

val squared = data.map(x => x * x)

// Print the result

println(squared.collect().mkString(", "))

// Stop the SparkContext

sc.stop()

}

}See Scala with Spark examples on this site, if you are interested in more Scala with Spark.

Simple example of SparkContext in Python

from pyspark import SparkConf, SparkContext

conf = SparkConf().setAppName("PySparkApp").setMaster("local[*]")

sc = SparkContext(conf=conf)

# Create an RDD and transform

data = sc.parallelize([1, 2, 3, 4, 5])

squared = data.map(lambda x: x*x)

print(squared.collect())

sc.stop()In summary, SparkContext was the original entry point and the main component responsible for managing the execution of Spark jobs in the early days of Apache Spark.

See Python with Spark examples on this site, if you are interested in more Python with Spark.

What is Spark SQLContext?

SQLContext was introduced in Spark 1.0 as a replacement for SchemaRDD, which was the earlier API used to work with structured data in Spark.

SQLContext was originally designed, released, and has evolved over time, to assist in querying data stored in formats such as Parquet, JSON, CSV, and more using the SQL.

SQLContext also evolved beyond RDDs to provide access to DataFrames and DataSets related functions.

Simple example of SQLContext in Scala

import org.apache.spark.SparkConf

import org.apache.spark.SparkContext

import org.apache.spark.sql.SQLContext

import org.apache.spark.sql.functions._

object SQLContextExampleApp {

def main(args: Array[String]): Unit = {

val conf = new SparkConf()

.setAppName("SQLContextExampleApp")

.setMaster("local[*]")

val sc = new SparkContext(conf)

// Create an SQLContext with the SparkContext

val sqlContext = new SQLContext(sc)

// Create a DataFrame

val data = Seq((1, "John"), (2, "Naya"), (3, "Juliette"))

val df = sqlContext.createDataFrame(data).toDF("id", "name")

// Apply some SQL queries

df.select("name").show()

df.filter(col("id") > 1).show()

// Stop the SparkContext

sc.stop()

}

}Simple example of SQLContext in Python

from pyspark import SparkConf, SparkContext

from pyspark.sql import SQLContext

from pyspark.sql.functions import *

conf = SparkConf().setAppName("SQLContextExampleApp").setMaster("local[*]")

# Create a SparkContext

sc = SparkContext(conf=conf)

# Create an SQLContext with SparkContext

sqlContext = SQLContext(sc)

# Create a DataFrame

data = [(1, "John"), (2, "Naya"), (3, "Juliette")]

df = sqlContext.createDataFrame(data, ["id", "name"])

# Apply some SQL queries

df.select("name").show()

df.filter(col("id") > 1).show()

# Stop the SparkContext

sc.stop()Starting in Spark 2.0, SQLContext was replaced by SparkSession. However, SQLContext is still supported in Spark and can be used for backward compatibility in legacy applications.

For API docs on SQLContext, see https://spark.apache.org/docs/latest/api/scala/org/apache/spark/sql/SQLContext.html

What is SparkSession?

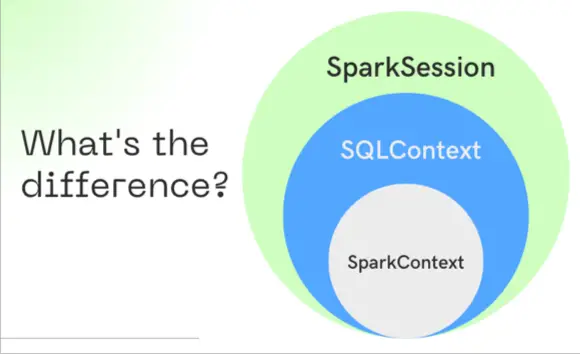

SparkSession is the unified entry point to use all the features of Apache Spark, including Spark SQL, DataFrame API, and Dataset API. It was introduced in Spark 2.0 as a higher-level API than SparkContext and SQLContext and provides a simplified, user-friendly interface for interacting with Spark.

As you can guess by now, it should be used in new Spark applications.

Similar to what we saw previous in SQLContext, SparkSession includes SparkContext as one of its components.

SparkSession continues the tradition of being responsible for managing the connection to the Spark cluster and creating RDDs, DataFrames, and Spark SQL, and running Spark jobs. It can be used to configure Spark settings and provides methods for to creating abstractions to various data sources, such as CSV files, JSON files, and databases, and in turn, writing data to those sources.

With SparkSession, you can work with both structured and unstructured data using the DataFrame and Dataset APIs or execute SQL queries against your data using Spark SQL.

In summary, SparkSession is the main entry point for using all the features of Apache Spark and provides a simplified, user-friendly interface for interacting with Spark. It allows you to work with both structured and unstructured data and provides support for various advanced features and integration with other big data technologies.

Simple example of SparkSession in Scala

import org.apache.spark.sql.SparkSession

// Create a SparkSession object

val spark = SparkSession.builder()

.appName("MySparkSessionApp")

.config("spark.master", "local")

.getOrCreate()

// Create a DataFrame from CSV

val df = spark.read.format("csv")

.option("header", "true")

.load("path/to/myfile.csv")

// Show the first 10 rows of the DataFrame

df.show(10)

spark.stop()Simple example of SparkSession in PySpark

from pyspark.sql import SparkSession

# Create a SparkSession object

spark = SparkSession.builder \

.appName("PySparkSparkSessionApp") \

.config("spark.master", "local") \

.getOrCreate()

df = spark.read.format("csv") \

.option("header", "true") \

.load("path/to/myfile.csv")

df.show(10)

# Stop the SparkSession

spark.stop()For API docs on SparkSession, see https://spark.apache.org/docs/latest/api/scala/org/apache/spark/sql/SparkSession.html

Should I use SparkSession, SQLContext, or SparkContext?

If you are working with Spark 2.0 or later, use SparkSession.

SparkSession includes functionality from SQLContext, so it can be used to work with structured data using Spark’s DataFrame and Dataset APIs. SQL queries can also be used directly on your data.

By the way, if interested in more examples and learning of Spark SQL, see either the Spark SQL in Scala or PySpark SQL sections on this site

-The “Spark Doctor” (not really an “official” doctor, more like an “unofficial” doctor)

Historically, SQLContext was the older entry point to Spark functionality and still available in Spark 2.0 and beyond. As we saw, SQLContext provides a way to work with structured data using Spark’s DataFrame and SQL APIs, but it does not include all of the functionality of SparkSession.

SparkContext is the lowest level entry point to Spark functionality and is mainly used for creating RDDs and performing low-level transformations and actions on them. If you are working with Spark 2.0 or later, it is recommended to use SparkSession instead of SparkContext.

In summary, if you are working with Spark 2.0 or later, it is recommended to use SparkSession as the entry point for all Spark functionality. If you are working with older versions of Spark, you may need to use SQLContext or SparkContext depending on your use case.

| SparkContext | SQLContext | SparkSession |

|---|---|---|

| The original | an advance | the latest |

| what you should be using, given the choice |

Spark Examples on Supergloo.com

Supergloo.com has been providing Spark examples since 1982, ok, ok, I’m kidding, but it has been a while… maybe 4-6 years. Given that history, you will find code examples of SparkContext, SQLContext and SparkSession throughout this site.

I’m probably not going to go back and update all the old tutorials. I kind of hope you don’t mind, but I mean, common, you don’t really have any right to complain that much.

But, you know, feel free to participate a bit, if you have a question, complaint, or suggestion to make things better, do let me know.