Apache Kafka includes a command-line tool named kafka-configs.sh used to obtain configuration values for various types of entities such as topics, clients, users, brokers, and loggers.

But using this tool to determine current configuration values at runtime can be more difficult than you might expect.

This can be especially true if you want Kafka broker configuration values.

In this Kafka Configuration with kafka-configs.sh tutorial, I’ll cover common scenarios I’ve experienced over the years for brokers, topics, etc.

This post is for me for future reference as much as it is for you, so I’m going to try to have some fun here and may even poke fun at you from time to time. I hope you can take it.

Overview

When considering Kafka configuration, the focus is usually on finding the settings of a particular topic or determining the configuration of a Kafka broker.

For Kafka broker config, if you have access to the Kafka configuration setting files, then you can check there. No problem.

But what if you don’t have access to the Kafka broker config/ directory? Then how do you determine the values?

Or, what about a particular topic? How do you view how a particular topic is configured?

This is what we are going to explore in this post with descriptions and examples.

If the particular example you’re looking for isn’t found here, leave a comment below so I can help. Maybe.

Or even better, someone else can help by responding to your comment.

I’m guessing you know by now I’m a big-time Kafka tutorial writing big-shot who sings “biggy biggy biggy, can’t you see, sometimes your moves, just hypnotize me”. But, honestly, I like to spread-the-wealth of overall big-ness, so if questions and answers can be achieved in the comments below without my involvement, that would be big. Really big.

Table of Contents

- Overview

- Kafka Configuration Before We Begin

- How to kafka-configs.sh

- kafka-configs.sh demo requirements

- View Kafka Broker Configuration with kafka-configs.sh

- View Kafka Topic Configuration with kafka-configs.sh

- Further kafka-configs.sh Reference

Kafka Configuration Before We Begin

We need to know there are two types of configuration properties in Apache Kafka: dynamic and static.

Properties that are specified at startup and remain fixed for the life of the broker or client are known as static configuration values. These properties are normally set in the configuration file or supplied as command-line arguments when the process is launched. broker.id and log.dirs are examples of static configuration settings.

By contrast, dynamic configuration values are settings which can be changed while the Kafka broker or client is still running. That’s how they got the name “dynamic” 😉

Both the Kafka Admin API and the kafka-configs.sh utility allow for the modification of these properties. max.connections.per.ip, log.retention.ms, log.flush.interval.ms, and other parameters are examples of dynamic configuration values.

The main benefit of dynamic configuration values is the ability to quickly change Kafka configurations without needing to restart. This can be very helpful when you need to temporarily alter configuration values or modify settings in response to shifting workloads or performance requirements.

Static configuration values, on the other hand, are normally set on initialization and remain the same throughout the runtime. By modifying the configuration file and restarting the process, static configuration values can be changed, although this can be disruptive and requires downtime.
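When you do have access to the broker’s properties file, a static value can be read straight from it. Here is a minimal sketch of that idea. The helper name static_config_value is made up for this post, and it assumes plain key=value lines with # comments and no line continuations.

```shell
# Print the value of one property from a broker properties file.
# Assumptions: plain key=value lines, '#' comments, no line continuations.
static_config_value() {
  # $1 = path to the properties file, $2 = property name
  awk -F= -v key="$2" '
    /^[[:space:]]*#/ { next }                 # skip comment lines
    $1 == key {
      value = substr($0, length($1) + 2)      # everything after the first "="
      found = 1                                # a later duplicate replaces an earlier one
    }
    END { if (found) print value }
  ' "$1"
}

# Example (the path is an assumption; adjust to your install):
# static_config_value /opt/kafka/config/server.properties log.dirs
```

This only tells you what is in the file. It says nothing about dynamic overrides applied to the running broker, which is what the rest of this post is about.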

How to kafka-configs.sh

Managing the setup of Kafka brokers, topics, and clients is possible with the help of the shell script kafka-configs.sh, which is included with Apache Kafka in the bin/ directory. Kafka configuration settings can be viewed and changed using it. We are going to use it in the examples below.
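The describe calls used throughout this post all share one shape: a connection flag, an entity type, an entity name, and --describe. As a rough sketch, that shape can be wrapped in a small function. The name describe_entity is hypothetical, and the script path and broker address are assumptions taken from this post’s demo setup.

```shell
# Location of kafka-configs.sh and the broker address are assumptions
# taken from this post's demo setup; override them for your environment.
KAFKA_CONFIGS="${KAFKA_CONFIGS:-$HOME/dev/kafka_2.13-2.8.1/bin/kafka-configs.sh}"
BOOTSTRAP_SERVER="${BOOTSTRAP_SERVER:-localhost:9092}"

# describe_entity is a hypothetical helper, not part of Kafka.
# $1 = entity type (brokers, topics, ...), $2 = entity name
describe_entity() {
  "$KAFKA_CONFIGS" --bootstrap-server "$BOOTSTRAP_SERVER" \
    --entity-type "$1" --entity-name "$2" --describe
}

# Usage:
# describe_entity brokers 1001
# describe_entity topics test-topic
```

Keep in mind that the pre-2.5 topic examples later in this post use --zookeeper instead of --bootstrap-server, so this wrapper fits the newer CLI best.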

kafka-configs.sh demo requirements

When running through the examples, I’m going to start up Docker containers using docker-compose. Also, I’m going to run Kafka CLI commands from Kafka distributions I’ve downloaded and extracted.

The docker-compose files used in these Kafka config demos can be found at: https://github.com/supergloo/kafka-examples/tree/master/configuration-examples

Hopefully this will make sense in the examples below, but let me know if you have any questions or ideas for improvement.

To confirm, this is what I use in the examples:

- Extracted Kafka distribution version 2.4.1

- Extracted Kafka distribution version 2.8.1

- Docker and docker-compose. See link above for the compose file if you wish to run.

View Kafka Broker Configuration with kafka-configs.sh

The version of Kafka you are using will either greatly simplify, or frankly, complicate the approach to obtaining Kafka config values.

It’s much easier starting in Kafka 2.5 where KIP-524 was implemented. See links below for more information.

How-to View Broker Configuration < Kafka 2.5

Prior to Apache Kafka 2.5, it was only possible to view dynamic broker configs using kafka-configs.sh.

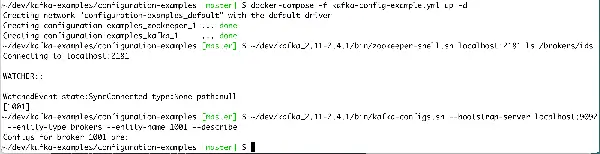

For example, when running kafka-configs.sh from an Apache Kafka 2.4.1 distribution against a Kafka 2.4.1 cluster (the docker-compose YAML file is in the GitHub repo linked above):

- Start the cluster with

docker-compose -f kafka-config-example.yml up -d

- Determine the broker id with

~/dev/kafka_2.11-2.4.1/bin/zookeeper-shell.sh localhost:2181 ls /brokers/ids

(in this example, you’ll see it’s 1001 below)

- Describe the broker configuration with

~/dev/kafka_2.11-2.4.1/bin/kafka-configs.sh --bootstrap-server localhost:9092 --entity-type brokers --entity-name 1001 --describe

The output is nothing as shown in the following screenshot.

But, if we change a dynamic config variable and then run the same command, we’ll be able to see the updated dynamic value.

~/dev/kafka_2.11-2.4.1/bin/kafka-configs.sh --bootstrap-server localhost:9092 --entity-type brokers --entity-name 1001 --alter --add-config 'log.cleanup.policy=delete'

Completed updating config for broker: 1001.

and then re-run the previous kafka-configs.sh command with the --describe option:

~ $ ~/dev/kafka_2.11-2.4.1/bin/kafka-configs.sh --bootstrap-server localhost:9092 --entity-type brokers --entity-name 1001 --describe

Configs for broker 1001 are:

log.cleanup.policy=delete sensitive=false synonyms={DYNAMIC_BROKER_CONFIG:log.cleanup.policy=delete, DEFAULT_CONFIG:log.cleanup.policy=delete}

This is the expected behavior in Apache Kafka versions before 2.5. It gets much easier in newer versions.
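If you were only experimenting and want the broker back the way it was, the dynamic override can be removed again with the --delete-config option of kafka-configs.sh. A small sketch, where remove_broker_override is a hypothetical helper name and the script path and broker address are assumptions from this post’s demo setup:

```shell
# Path and broker address are assumptions from this post's demo setup.
KAFKA_CONFIGS="${KAFKA_CONFIGS:-$HOME/dev/kafka_2.11-2.4.1/bin/kafka-configs.sh}"
BOOTSTRAP_SERVER="${BOOTSTRAP_SERVER:-localhost:9092}"

# remove_broker_override is a hypothetical helper name.
# $1 = broker id, $2 = name of the dynamic property to remove
remove_broker_override() {
  "$KAFKA_CONFIGS" --bootstrap-server "$BOOTSTRAP_SERVER" \
    --entity-type brokers --entity-name "$1" \
    --alter --delete-config "$2"
}

# Usage:
# remove_broker_override 1001 log.cleanup.policy
```

After the override is removed, I would expect the --describe output for the broker to come back empty again on a pre-2.5 CLI, since there is no longer a dynamic value to show.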

How-to View Broker Configuration >= Kafka 2.5

Starting with Apache Kafka 2.5, obtaining all broker configuration variables becomes much, much easier by adding the “--all” option to kafka-configs.sh.

For example, even though I’m still using a Kafka cluster running 2.4.1, if I use a 2.8.1 client, I can see all the static and dynamic configuration values as shown:

~/dev/kafka_2.13-2.8.1/bin/kafka-configs.sh --bootstrap-server localhost:9092 --entity-type brokers --entity-name 1001 --all --describe

All configs for broker 1001 are:

advertised.host.name=null sensitive=false synonyms={}

log.cleaner.min.compaction.lag.ms=0 sensitive=false synonyms={DEFAULT_CONFIG:log.cleaner.min.compaction.lag.ms=0}

metric.reporters= sensitive=false synonyms={DEFAULT_CONFIG:metric.reporters=}

quota.producer.default=9223372036854775807 sensitive=false synonyms={DEFAULT_CONFIG:quota.producer.default=9223372036854775807}

offsets.topic.num.partitions=50 sensitive=false synonyms={DEFAULT_CONFIG:offsets.topic.num.partitions=50}

log.flush.interval.messages=9223372036854775807 sensitive=false synonyms={DEFAULT_CONFIG:log.flush.interval.messages=9223372036854775807}

controller.socket.timeout.ms=30000 sensitive=false synonyms={DEFAULT_CONFIG:controller.socket.timeout.ms=30000}

...

We even see which of the configuration variables are static or not. I snipped the output of the previous command, but further down in the results, we’ll see STATIC_BROKER_CONFIG results such as

num.network.threads=3 sensitive=false synonyms={STATIC_BROKER_CONFIG:num.network.threads=3, DEFAULT_CONFIG:num.network.threads=3}

So, long story short, use a new Kafka CLI version to view the Kafka broker config.
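Since the full listing is long, it can help to filter it by where each value comes from. The sketch below keeps only the properties whose first synonym matches a given source. It assumes the first entry in synonyms={...} is the one in effect, which matches the output shown in this post, and configs_by_source is a hypothetical helper name.

```shell
# configs_by_source is a hypothetical helper: it reads `--describe --all`
# output on stdin and prints name=value for each property whose first
# synonym comes from the given source (e.g. STATIC_BROKER_CONFIG).
configs_by_source() {
  # $1 = source name as it appears in the synonyms list
  grep -F "synonyms={$1:" | sed -e 's/^[[:space:]]*//' -e 's/ sensitive=.*$//'
}

# Usage (paths and broker id are from this post's demo setup):
# ~/dev/kafka_2.13-2.8.1/bin/kafka-configs.sh --bootstrap-server localhost:9092 \
#   --entity-type brokers --entity-name 1001 --describe --all \
#   | configs_by_source STATIC_BROKER_CONFIG
```

Passing DYNAMIC_BROKER_CONFIG or DEFAULT_CONFIG instead gives the dynamic overrides or the untouched defaults. Properties with an empty synonyms={} list are not matched by any source.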

As a side note, in case you are wondering more about how it’s possible to run a new Kafka CLI version (2.8.1) against an older Kafka Cluster (2.4.1) check out the determine Kafka version tutorial.

View Kafka Topic Configuration with kafka-configs.sh

We see the same behavior as in the previous Kafka broker configuration examples when viewing Kafka topic configuration.

Create a new topic in our Kafka 2.4.1 cluster, for example:

kafka-topics.sh --create --topic test-topic --bootstrap-server localhost:9092

It doesn’t matter which version of kafka-topics.sh is used.

How-to View Kafka Topic Configuration < Kafka 2.5

When using a pre-2.5 version of kafka-configs.sh, again, we are not able to see much:

~/dev/kafka_2.11-2.4.1/bin/kafka-configs.sh --zookeeper localhost:2181 --entity-type topics --entity-name test-topic --describe

Configs for topic 'test-topic' are

Also, a special note on the example above: it needs --zookeeper instead of --bootstrap-server.

Now, if we update a topic value, for example

~ $ ~/dev/kafka_2.11-2.4.1/bin/kafka-configs.sh --zookeeper localhost:2181 --entity-type topics --entity-name test-topic --alter --add-config 'cleanup.policy=compact'

Completed Updating config for entity: topic 'test-topic'.

and re-run kafka-configs.sh with the --describe option

~ $ ~/dev/kafka_2.11-2.4.1/bin/kafka-configs.sh --zookeeper localhost:2181 --entity-type topics --entity-name test-topic --describe

Configs for topic 'test-topic' are cleanup.policy=compact

How-to View Kafka Topic Configuration >= Kafka 2.5

Again, much easier when the --all option is available from newer versions of the CLI.

~ $ ~/dev/kafka_2.13-2.8.1/bin/kafka-configs.sh --bootstrap-server localhost:9092 --entity-type topics --entity-name test-topic --describe --all

All configs for topic test-topic are:

compression.type=producer sensitive=false synonyms={DEFAULT_CONFIG:compression.type=producer}

leader.replication.throttled.replicas= sensitive=false synonyms={}

min.insync.replicas=1 sensitive=false synonyms={DEFAULT_CONFIG:min.insync.replicas=1}

message.downconversion.enable=true sensitive=false synonyms={DEFAULT_CONFIG:log.message.downconversion.enable=true}

segment.jitter.ms=0 sensitive=false synonyms={}

cleanup.policy=compact sensitive=false synonyms={DYNAMIC_TOPIC_CONFIG:cleanup.policy=compact, DYNAMIC_BROKER_CONFIG:log.cleanup.policy=delete, DEFAULT_CONFIG:log.cleanup.policy=delete}

...

Further kafka-configs.sh Reference

https://stackoverflow.com/questions/55289221/getting-broker-configuration-via-kafka-configs-sh provides a question and answers over time that cover similar ground to what I did above.

https://github.com/apache/kafka/blob/trunk/core/src/main/scala/kafka/admin/ConfigCommand.scala is the source code of what is eventually called by kafka-configs.sh

https://issues.apache.org/jira/browse/KAFKA-9040 is the origin of the --all option.

https://docs.confluent.io/platform/current/kafka/dynamic-config.html#dynamic-ak-configurations describes dynamic vs. static config vars in more detail.